Table of contents

- Introduction

- 🎯 Goals

- 🚀 Getting Started with VideoSDK

- 🔌 Integrate VideoSDK

- 🎥 Essential Steps to Add Video in Your App

- 🖥️ Integrate Screen Sharing Feature

- Create Broadcast Upload Extension in iOS

- Step 1: Open Target

- Step 2: Select Target

- Step 3: Configure Broadcast Upload Extension

- Step 4: Activate Extension scheme

- Step 5: Add External file in Created Extension

- Step 6: Update SampleHandler.swift file

- Step 7: Add Capability in the App

- Step 8: Add Capability in Extension

- Step 9: Add App Group ID in Extension File

- Step 10: Update App level info.plist file

- Create an iOS Native Module for RPSystemBroadcastPickerView

- Enable Screen Share

- Disable Screen Share

- Rendering Screen Share Stream

- Events Associated with Screen Share

- 🔚 Conclusion

Introduction

In today's digital world, good communication and teamwork are crucial, and video conferencing has become indispensable for staying connected. Screen sharing is an integral part of modern communication and collaboration platforms, allowing users to share their device displays with others during meetings, presentations, and remote collaboration. In this article, we'll look at how to integrate Screen Share into a React Native iOS app with VideoSDK.

🎯 Goals

By the end of this article, you will:

- Create a VideoSDK account and generate your VideoSDK auth token.

- Integrate the VideoSDK library and dependencies into your project.

- Implement core functionalities for video calls using VideoSDK.

- Enable the Screen Sharing feature.

🚀 Getting Started with VideoSDK

To take advantage of the Screen Sharing functionality, we must use the capabilities that the VideoSDK offers. Before diving into the implementation steps, let's ensure you complete the necessary prerequisites.

Create a VideoSDK Account

Go to your VideoSDK dashboard and sign up if you don't have an account. This account gives you access to the required Video SDK token, which acts as an authentication key that allows your application to interact with VideoSDK functionality.

Generate your Auth Token

Visit your VideoSDK dashboard and navigate to the "API Key" section to generate your auth token. This token is crucial in authorizing your application to use VideoSDK features.

For a more visual understanding of the account creation and token generation process, consider referring to the provided tutorial.

Prerequisites and Setup

Make sure your development environment meets the following requirements:

Node.js v12+

NPM v6+ (comes installed with newer Node versions)

Android Studio or Xcode installed

🔌 Integrate VideoSDK

Install the VideoSDK by using the following command. Ensure that you are in your project directory before running this command.

- For NPM

npm install "@videosdk.live/react-native-sdk" "@videosdk.live/react-native-incallmanager"

- For Yarn

yarn add "@videosdk.live/react-native-sdk" "@videosdk.live/react-native-incallmanager"

Project Configuration

Before integrating the Screen Share functionality, ensure that your project is correctly prepared to handle the integration. This setup consists of a sequence of steps for configuring permissions, dependencies, and platform-specific parameters so that VideoSDK can function seamlessly inside your application.

iOS Setup

Ensure that you are using CocoaPods version 1.10 or later.

1. To update CocoaPods, reinstall the gem using the following command:

$ sudo gem install cocoapods

2. Manually link react-native-incall-manager (if it is not linked automatically).

Select Your_Xcode_Project/TARGETS/BuildSettings, in Header Search Paths, add "$(SRCROOT)/../node_modules/@videosdk.live/react-native-incall-manager/ios/RNInCallManager"

3. Change the path of react-native-webrtc by adding the following line to your Podfile:

pod 'react-native-webrtc', :path => '../node_modules/@videosdk.live/react-native-webrtc'

4. Change the version of your platform.

You need to change the platform field in the Podfile to 12.0 or above because react-native-webrtc doesn't support iOS versions earlier than 12.0. Update the line to: platform :ios, '12.0'

5. Install pods.

After updating the version, you need to install the pods by running the following command:

pod install

6. Add “libreact-native-webrtc.a” binary.

Add the "libreact-native-webrtc.a" binary to the "Link Binary With Libraries" section in the target of your main project folder.

7. Declare permissions in Info.plist :

Add the following lines to your Info.plist file:

<key>NSCameraUsageDescription</key>

<string>Camera permission description</string>

<key>NSMicrophoneUsageDescription</key>

<string>Microphone permission description</string>

ios/projectname/info.plist

Register Service

Register VideoSDK services in your root index.js file for the initialization service.

import { AppRegistry } from "react-native";

import App from "./App";

import { name as appName } from "./app.json";

import { register } from "@videosdk.live/react-native-sdk";

register();

AppRegistry.registerComponent(appName, () => App);

index.js

🎥 Essential Steps to Add Video in Your App

By following these essential steps, you can add video calling to your application.

Step 1: Get started with api.js

Before moving on, you must create an API request to generate a unique meetingId. You will need an authentication token, which you can create either through the videosdk-rtc-api-server-examples or directly from the VideoSDK Dashboard for developers.

export const token = "<Generated-from-dashboard>";

// API call to create meeting

export const createMeeting = async ({ token }) => {

const res = await fetch(`https://api.videosdk.live/v2/rooms`, {

method: "POST",

headers: {

authorization: `${token}`,

"Content-Type": "application/json",

},

body: JSON.stringify({}),

});

const { roomId } = await res.json();

return roomId;

};

api.js
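The request above assumes the call always succeeds. As a minimal sketch, the request shape and the response check can be separated so that a missing token or a response without a roomId fails early with a readable message. The helper names buildCreateRoomRequest and extractRoomId are hypothetical and not part of VideoSDK; only the URL, method, headers, and the roomId field come from api.js.

```javascript
// Hypothetical helpers for illustration; not part of the VideoSDK API.
// Only the URL, method, headers, and `roomId` field come from api.js above.

function buildCreateRoomRequest(token) {
  // Fail early instead of sending a request that cannot be authorized.
  if (typeof token !== "string" || token.trim() === "") {
    throw new Error("A VideoSDK auth token is required to create a meeting");
  }
  return {
    url: "https://api.videosdk.live/v2/rooms",
    options: {
      method: "POST",
      headers: {
        authorization: token,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({}),
    },
  };
}

function extractRoomId(payload) {
  // Assumption: a failed request yields a JSON body without a string `roomId`.
  if (!payload || typeof payload.roomId !== "string") {
    throw new Error("Response did not contain a roomId");
  }
  return payload.roomId;
}
```

With these in place, createMeeting could call fetch(url, options) with the built request and pass the parsed JSON to extractRoomId, so a bad token surfaces as an error rather than an undefined meeting ID.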

Step 2: Wireframe App.js with all the components

To build up a wireframe of App.js, you need to use VideoSDK Hooks and Context Providers. VideoSDK provides MeetingProvider, MeetingConsumer, useMeeting, and useParticipant hooks.

First, you need to understand the Context of Provider and Consumer. Context is primarily used when some data needs to be accessible by many components at different nesting levels.

MeetingProvider: This is the Context Provider. It accepts config and token as props. The Provider component accepts a value prop to be passed to consuming components that are descendants of this Provider. One Provider can be connected to many consumers. Providers can be nested to override values deeper within the tree.

MeetingConsumer: This is the Context Consumer. All consumers that are descendants of a Provider will re-render whenever the Provider's value prop changes.

useMeeting: This is the meeting hook API. It includes all the information related to meetings such as join/leave, enable/disable the mic or webcam, etc.

useParticipant: This is the participant hook API. It is responsible for handling all the events and props related to one particular participant such as name, webcamStream, micStream, etc...

The Meeting Context provides a way to listen for any changes that occur when a participant joins the meeting or makes modifications to their microphone, camera, and other settings.

Begin by making a few changes to the code in the App.js file.

import React, { useState } from "react";

import {

SafeAreaView,

TouchableOpacity,

Text,

TextInput,

View,

FlatList,

} from "react-native";

import {

MeetingProvider,

useMeeting,

useParticipant,

MediaStream,

RTCView,

} from "@videosdk.live/react-native-sdk";

import { createMeeting, token } from "./api";

function JoinScreen(props) {

return null;

}

function ControlsContainer() {

return null;

}

function MeetingView() {

return null;

}

export default function App() {

const [meetingId, setMeetingId] = useState(null);

const getMeetingId = async (id) => {

const meetingId = id == null ? await createMeeting({ token }) : id;

setMeetingId(meetingId);

};

return meetingId ? (

<SafeAreaView style={{ flex: 1, backgroundColor: "#F6F6FF" }}>

<MeetingProvider

config={{

meetingId,

micEnabled: false,

webcamEnabled: true,

name: "Test User",

}}

token={token}

>

<MeetingView />

</MeetingProvider>

</SafeAreaView>

) : (

<JoinScreen getMeetingId={getMeetingId} />

);

}

App.js

Step 3: Implement Join Screen

The join screen will serve as a medium to either schedule a new meeting or join an existing one.

function JoinScreen(props) {

const [meetingVal, setMeetingVal] = useState("");

return (

<SafeAreaView

style={{

flex: 1,

backgroundColor: "#F6F6FF",

justifyContent: "center",

paddingHorizontal: 6 * 10,

}}

>

<TouchableOpacity

onPress={() => {

props.getMeetingId();

}}

style={{ backgroundColor: "#1178F8", padding: 12, borderRadius: 6 }}

>

<Text style={{ color: "white", alignSelf: "center", fontSize: 18 }}>

Create Meeting

</Text>

</TouchableOpacity>

<Text

style={{

alignSelf: "center",

fontSize: 22,

marginVertical: 16,

fontStyle: "italic",

color: "grey",

}}

>

---------- OR ----------

</Text>

<TextInput

value={meetingVal}

onChangeText={setMeetingVal}

placeholder={"XXXX-XXXX-XXXX"}

style={{

padding: 12,

borderWidth: 1,

borderRadius: 6,

fontStyle: "italic",

}}

/>

<TouchableOpacity

style={{

backgroundColor: "#1178F8",

padding: 12,

marginTop: 14,

borderRadius: 6,

}}

onPress={() => {

props.getMeetingId(meetingVal);

}}

>

<Text style={{ color: "white", alignSelf: "center", fontSize: 18 }}>

Join Meeting

</Text>

</TouchableOpacity>

</SafeAreaView>

);

}

JoinScreen Component
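One detail worth guarding in the join flow: pressing Join Meeting with an empty field passes an empty string to getMeetingId. Because an empty string is not null, no meeting is created, and because it is falsy, the join screen simply stays visible. A small sketch of an input check follows; normalizeMeetingId is a hypothetical helper, not part of the SDK.

```javascript
// Hypothetical helper: returns the trimmed meeting ID,
// or null when nothing usable was typed.
function normalizeMeetingId(input) {
  if (input == null) return null;
  const trimmed = String(input).trim();
  return trimmed === "" ? null : trimmed;
}
```

The Join Meeting handler could then call props.getMeetingId(id) only when normalizeMeetingId(meetingVal) returns a non-null id, and show a hint to the user otherwise.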

Step 4: Implement Controls

The next step is to create a ControlsContainer component to manage features such as Join or leave a Meeting and Enable or Disable the Webcam/Mic.

In this step, the useMeeting hook is used to acquire all the required methods, such as join(), leave(), toggleWebcam(), and toggleMic().

const Button = ({ onPress, buttonText, backgroundColor }) => {

return (

<TouchableOpacity

onPress={onPress}

style={{

backgroundColor: backgroundColor,

justifyContent: "center",

alignItems: "center",

padding: 12,

borderRadius: 4,

}}

>

<Text style={{ color: "white", fontSize: 12 }}>{buttonText}</Text>

</TouchableOpacity>

);

};

function ControlsContainer({ join, leave, toggleWebcam, toggleMic }) {

return (

<View

style={{

padding: 24,

flexDirection: "row",

justifyContent: "space-between",

}}

>

<Button

onPress={() => {

join();

}}

buttonText={"Join"}

backgroundColor={"#1178F8"}

/>

<Button

onPress={() => {

toggleWebcam();

}}

buttonText={"Toggle Webcam"}

backgroundColor={"#1178F8"}

/>

<Button

onPress={() => {

toggleMic();

}}

buttonText={"Toggle Mic"}

backgroundColor={"#1178F8"}

/>

<Button

onPress={() => {

leave();

}}

buttonText={"Leave"}

backgroundColor={"#FF0000"}

/>

</View>

);

}

ControlsContainer Component

function ParticipantList() {

return null;

}

function MeetingView() {

const { join, leave, toggleWebcam, toggleMic, meetingId } = useMeeting({});

return (

<View style={{ flex: 1 }}>

{meetingId ? (

<Text style={{ fontSize: 18, padding: 12 }}>

Meeting Id :{meetingId}

</Text>

) : null}

<ParticipantList />

<ControlsContainer

join={join}

leave={leave}

toggleWebcam={toggleWebcam}

toggleMic={toggleMic}

/>

</View>

);

}

MeetingView Component

Step 5: Render Participant List

After implementing the controls, the next step is to render the joined participants.

You can get all the joined participants from the useMeeting Hook.

function ParticipantView() {

return null;

}

function ParticipantList({ participants }) {

return participants.length > 0 ? (

<FlatList

data={participants}

renderItem={({ item }) => {

return <ParticipantView participantId={item} />;

}}

/>

) : (

<View

style={{

flex: 1,

backgroundColor: "#F6F6FF",

justifyContent: "center",

alignItems: "center",

}}

>

<Text style={{ fontSize: 20 }}>Press Join button to enter meeting.</Text>

</View>

);

}

ParticipantList Component

function MeetingView() {

// Get `participants` from useMeeting Hook

const { join, leave, toggleWebcam, toggleMic, participants } = useMeeting({});

const participantsArrId = [...participants.keys()];

return (

<View style={{ flex: 1 }}>

<ParticipantList participants={participantsArrId} />

<ControlsContainer

join={join}

leave={leave}

toggleWebcam={toggleWebcam}

toggleMic={toggleMic}

/>

</View>

);

}

MeetingView Component
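The [...participants.keys()] expression works on the assumption that participants is a Map keyed by participant ID, which the .keys() call suggests. A minimal sketch with hypothetical data shows the conversion into the plain array that FlatList receives:

```javascript
// Hypothetical stand-in for the `participants` value returned by useMeeting.
const participants = new Map([
  ["participant-a", { displayName: "Alice" }],
  ["participant-b", { displayName: "Bob" }],
]);

// Spread the key iterator into a plain array usable as FlatList `data`.
const participantsArrId = [...participants.keys()];

// Logs the two IDs in insertion order.
console.log(participantsArrId);
```

Passing only the IDs keeps the list items small; each ParticipantView then looks up its own participant details through useParticipant.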

Step 6: Handling Participant's Media

Before Handling the Participant's Media, you need to understand a couple of concepts.

1. useParticipant Hook

The useParticipant hook is responsible for handling all the properties and events of one particular participant who joined the meeting. It takes participantId as an argument.

const { webcamStream, webcamOn, displayName } = useParticipant(participantId);

useParticipant Hook Example

2. MediaStream API

The MediaStream API is beneficial for adding a MediaTrack into the RTCView component, enabling the playback of audio or video.

<RTCView

streamURL={new MediaStream([webcamStream.track]).toURL()}

objectFit={"cover"}

style={{

height: 300,

marginVertical: 8,

marginHorizontal: 8,

}}

/>

MediaStream API Example

Rendering Participant Media

function ParticipantView({ participantId }) {

const { webcamStream, webcamOn } = useParticipant(participantId);

return webcamOn && webcamStream ? (

<RTCView

streamURL={new MediaStream([webcamStream.track]).toURL()}

objectFit={"cover"}

style={{

height: 300,

marginVertical: 8,

marginHorizontal: 8,

}}

/>

) : (

<View

style={{

backgroundColor: "grey",

height: 300,

justifyContent: "center",

alignItems: "center",

}}

>

<Text style={{ fontSize: 16 }}>NO MEDIA</Text>

</View>

);

}

ParticipantView Component

Congratulations! With these steps complete, your application can host a basic video call. Next, we'll integrate the Screen Share feature.

🖥️ Integrate Screen Sharing Feature

Adding the Screen Share functionality to your application improves cooperation by allowing users to share their device screens during meetings or presentations. It allows everyone in the conference to view precisely what you see on your screen, which is useful for presentations, demos, and collaborations.

Create Broadcast Upload Extension in iOS

Step 1: Open Target

Open your project with Xcode, then select File > New > Target in the menu bar.

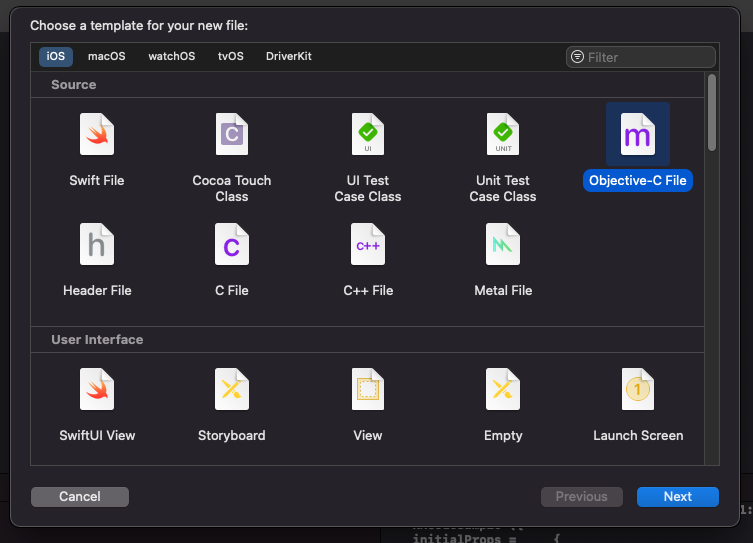

Step 2: Select Target

Select Broadcast Upload Extension and click next.

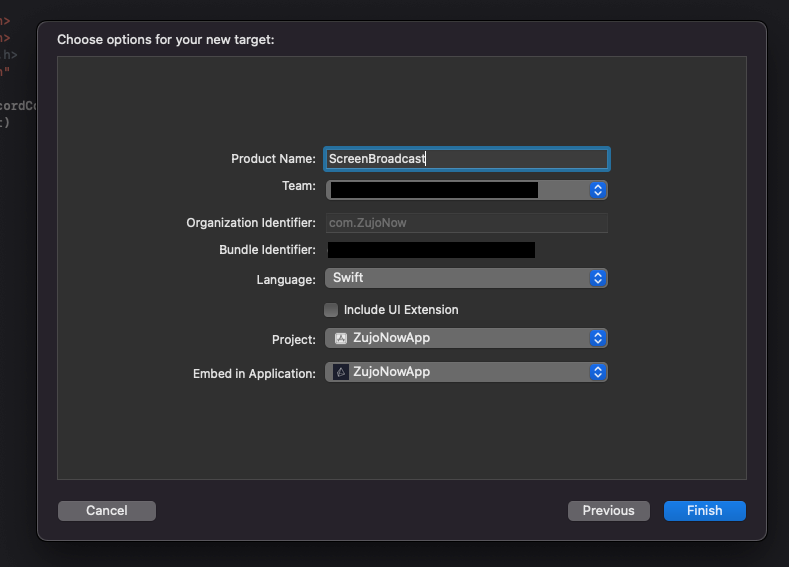

Step 3: Configure Broadcast Upload Extension

Enter the extension's name in the Product Name field, choose the team from the dropdown, uncheck the "Include UI extension" field, and click "Finish."

Step 4: Activate Extension scheme

You will be prompted with a popup: Activate "Your-Extension-name" scheme? Click "Activate".

Now, the "Broadcast" folder will appear in the Xcode left sidebar.

Step 5: Add External file in Created Extension

Open the videosdk-rtc-react-native-sdk-example repository, and copy the following files: SampleUploader.swift, SocketConnection.swift, DarwinNotificationCenter.swift, and Atomic.swift to your extension's folder. Ensure that these files are added to the target.

Step 6: Update SampleHandler.swift file

Open SampleHandler.swift in the same example repository and copy its content. Paste this content into your extension's SampleHandler.swift file.

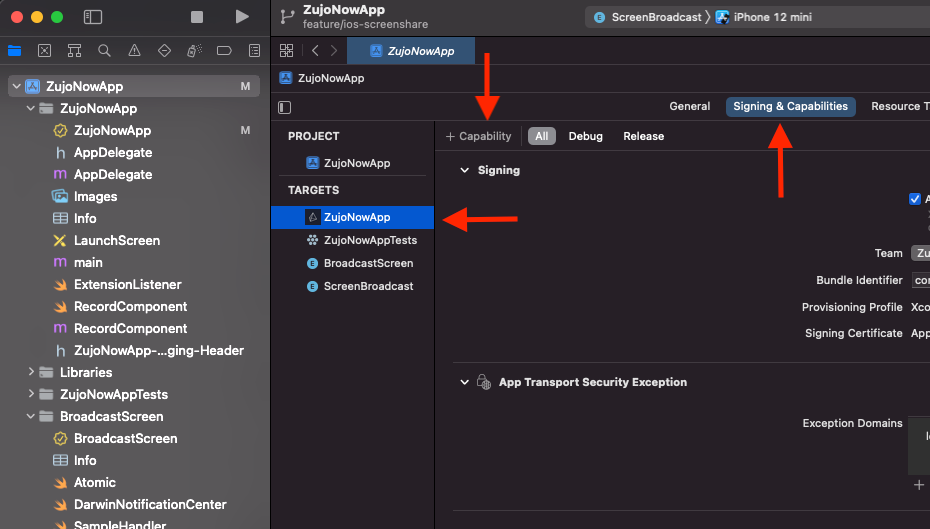

Step 7: Add Capability in the App

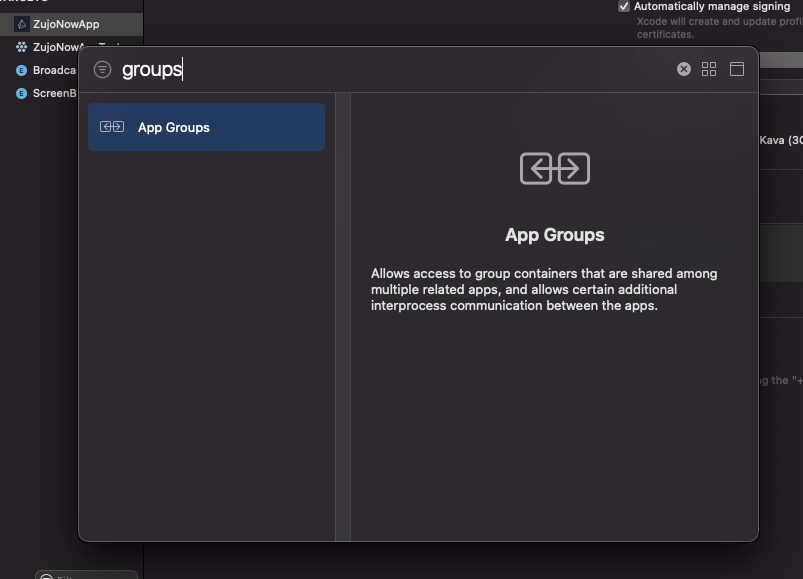

In Xcode, navigate to YourappName > Signing & Capabilities, and click on +Capability to configure the app group.

Choose App Groups from the list.

After that, select the App Group ID you created earlier, or add a new one.

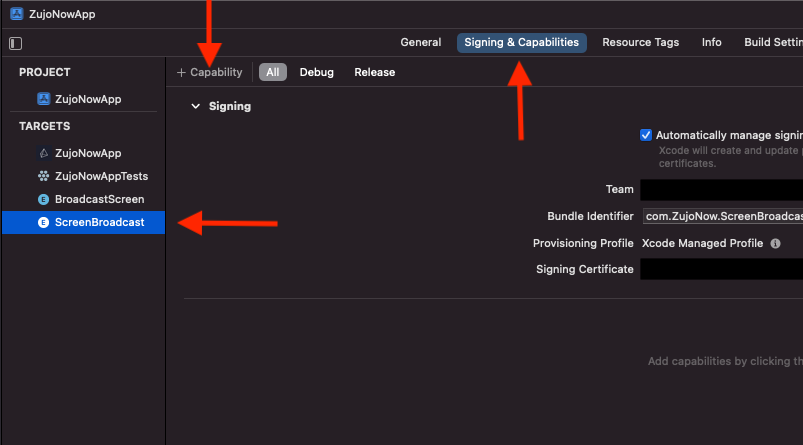

Step 8: Add Capability in Extension

Go to Your-Extension-Name > Signing & Capabilities and add the App Groups capability as in the previous step. The App Group ID must be the same for both targets.

Step 9: Add App Group ID in Extension File

Go to the extension's SampleHandler.swift file and paste your group ID into the appGroupIdentifier constant.

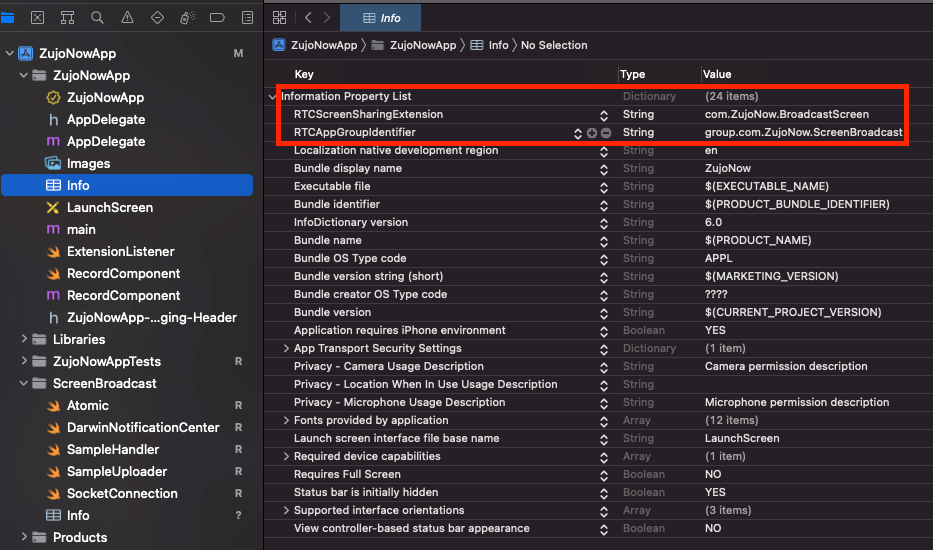

Step 10: Update App level info.plist file

Add a new key RTCScreenSharingExtension in Info.plist with the extension's Bundle Identifier as the value.

Add a new key RTCAppGroupIdentifier in Info.plist with the extension's App Group ID as the value.

Note: For the extension's Bundle Identifier, go to TARGETS > Your-Extension-Name > Signing & Capabilities.

You can also check out the extension's example code on github.
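As a sketch of what the two Step 10 entries might look like in the app's Info.plist: the key names come from the step above, while both string values are hypothetical placeholders that must be replaced with your own extension Bundle Identifier and App Group ID.

```xml
<!-- Placeholder values: replace with your extension's Bundle Identifier and your App Group ID -->
<key>RTCScreenSharingExtension</key>
<string>com.example.myapp.broadcast</string>
<key>RTCAppGroupIdentifier</key>
<string>group.com.example.myapp</string>
```

The App Group ID here should match the one pasted into appGroupIdentifier in Step 9 and the one configured for both targets in Steps 7 and 8.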

Create an iOS Native Module for RPSystemBroadcastPickerView

Step 1: Add Files to iOS Project

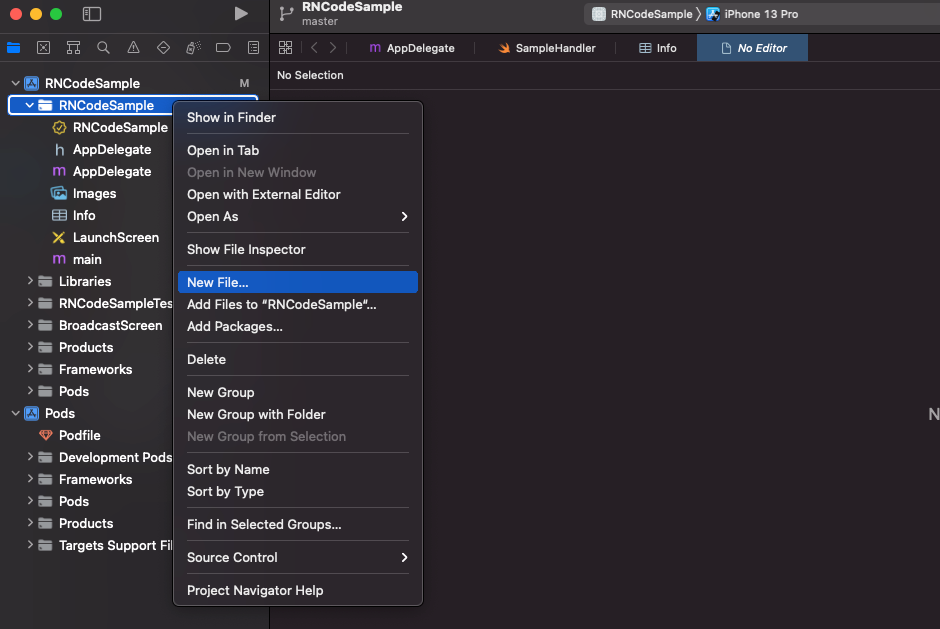

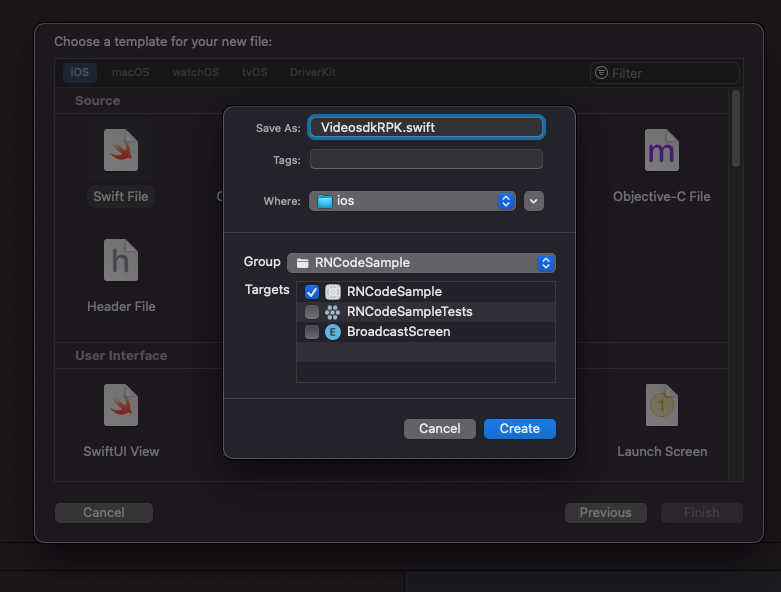

Go to Xcode > Your App and create a new file named VideosdkRPK.swift.

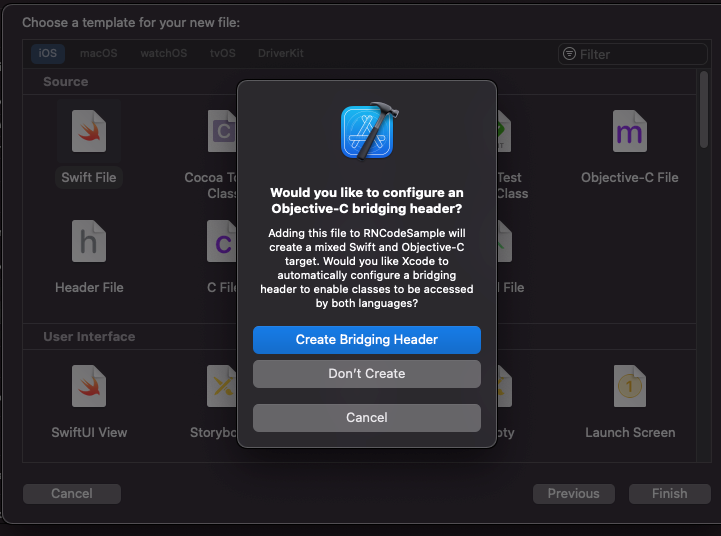

After clicking the Create button, it will prompt you to create a bridging header.

After creating the bridging header file, create an Objective-C file named VideosdkRPK.

- For the VideosdkRPK.swift file, copy the content from here.

- In the Appname-Bridging-Header file, add the line #import "React/RCTEventEmitter.h".

- For the VideosdkRPK.m file, copy the content from here.

Step 2: Integrate the Native Module on React Native side

- Create a file named VideosdkRPK.js and copy the content from here.

- Add the lines given below for handling the enable and disable screen share event.

Enable Screen Share

- By using the enableScreenShare() function of the useMeeting hook, the local participant can share their mobile screen with other participants.

- The Screen Share stream of a participant can be accessed from the screenShareStream property of the useParticipant hook.

Disable Screen Share

- By using the disableScreenShare() function of the useMeeting hook, the local participant can stop sharing their mobile screen with other participants.

import React, { useEffect } from "react";

import { TouchableOpacity, Text } from "react-native";

import { useMeeting } from "@videosdk.live/react-native-sdk";

import VideosdkRPK from "../VideosdkRPK";

// Hooks must be called inside a component; the component name is illustrative.

function ScreenShareControls() {

  const { enableScreenShare, disableScreenShare } = useMeeting({});

  useEffect(() => {

    // React to broadcast events emitted by the iOS native module

    VideosdkRPK.addListener("onScreenShare", (event) => {

      if (event === "START_BROADCAST") {

        enableScreenShare();

      } else if (event === "STOP_BROADCAST") {

        disableScreenShare();

      }

    });

    // Remove the listener when the component unmounts

    return () => {

      VideosdkRPK.removeSubscription("onScreenShare");

    };

  }, []);

  return (

    <>

      <TouchableOpacity

        onPress={() => {

          // Calling startBroadcast from iOS Native Module

          VideosdkRPK.startBroadcast();

        }}

      >

        <Text> Start Screen Share </Text>

      </TouchableOpacity>

      <TouchableOpacity

        onPress={() => {

          disableScreenShare();

        }}

      >

        <Text> Stop Screen Share </Text>

      </TouchableOpacity>

    </>

  );

}

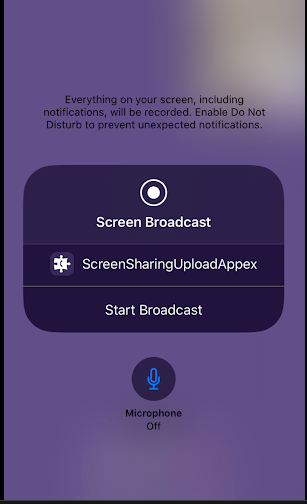

The VideosdkRPK.startBroadcast() method produces the following result.

After clicking the Start Broadcast button, you will be able to get the screen share stream in the session.
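The listener logic above maps two event names onto two meeting methods. As a minimal sketch, that mapping can be pulled into a plain function so it can be exercised without a device. handleBroadcastEvent is a hypothetical helper; the event names are the ones used in the listener, and the stub functions stand in for the useMeeting methods.

```javascript
// Hypothetical helper; the event names come from the listener shown above.
function handleBroadcastEvent(event, { enableScreenShare, disableScreenShare }) {
  switch (event) {
    case "START_BROADCAST":
      enableScreenShare();
      return "started";
    case "STOP_BROADCAST":
      disableScreenShare();
      return "stopped";
    default:
      // Unknown events are ignored rather than treated as errors.
      return "ignored";
  }
}

// Usage with stub functions standing in for the useMeeting methods.
const calls = [];
const stubs = {
  enableScreenShare: () => calls.push("enable"),
  disableScreenShare: () => calls.push("disable"),
};
handleBroadcastEvent("START_BROADCAST", stubs);
handleBroadcastEvent("STOP_BROADCAST", stubs);
```

Inside the component, the listener callback would pass the real enableScreenShare and disableScreenShare in place of the stubs.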

Rendering Screen Share Stream

- To render the screen share, you will need the participantId of the user presenting the screen. This can be obtained from the presenterId property of the useMeeting hook.

function MeetingView() {

const { presenterId } = useMeeting({});

return (

<View style={{ flex: 1 }}>

{/* ... */}

{presenterId && <PresenterView presenterId={presenterId} />}

<ParticipantList />

{/* ... */}

</View>

);

}

const PresenterView = ({ presenterId }) => {

return <Text>PresenterView</Text>;

};

- Now that you have the presenterId, you can obtain the screenShareStream using the useParticipant hook and play it in the RTCView component.

const PresenterView = ({ presenterId }) => {

const { screenShareStream, screenShareOn } = useParticipant(presenterId);

return (

<>

{/* Play the screen share stream in the RTCView */}

{screenShareOn && screenShareStream ? (

<RTCView

streamURL={new MediaStream([screenShareStream.track]).toURL()}

objectFit={"contain"}

style={{

flex: 1,

}}

/>

) : null}

</>

);

};

Events Associated with Screen Share

Events associated with enableScreenShare

- Every Participant will receive a callback on the onStreamEnabled() event of the useParticipant() hook with the Stream object.

- Every Participant will receive the onPresenterChanged() callback of the useMeeting hook, which provides the participantId as the presenterId of the participant who started the screen share.

Events associated with disableScreenShare

- Every Participant will receive a callback on the onStreamDisabled() event of the useParticipant() hook with the Stream object.

- Every Participant will receive the onPresenterChanged() callback of the useMeeting hook, with the presenterId as null, indicating that there is no current presenter.

import { useParticipant, useMeeting } from "@videosdk.live/react-native-sdk";

const MeetingView = () => {

//Callback for when the presenter changes

function onPresenterChanged(presenterId) {

if(presenterId){

console.log(presenterId, "started screen share");

}else{

console.log("someone stopped screen share");

}

}

const { participants } = useMeeting({

onPresenterChanged,

// ...

});

return <>...</>

}

const ParticipantView = ({ participantId }) => {

//Callback for when the participant starts a stream

function onStreamEnabled(stream) {

if(stream.kind === 'share'){

console.log("Share Stream On: onStreamEnabled", stream);

}

}

//Callback for when the participant stops a stream

function onStreamDisabled(stream) {

if(stream.kind === 'share'){

console.log("Share Stream Off: onStreamDisabled", stream);

}

}

const {

displayName,

// ...

} = useParticipant(participantId, {

onStreamEnabled,

onStreamDisabled,

// ...

});

return <> Participant View </>;

}
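Because onPresenterChanged receives null when sharing stops, the callback alone cannot say who stopped. A minimal sketch of one way to handle that is to remember the last presenter. createPresenterTracker and isShareStream are hypothetical helpers, not part of VideoSDK; the null-on-stop behavior and the stream.kind === "share" check are taken from the text and code above.

```javascript
// Hypothetical helper, not part of VideoSDK: remembers the last presenter so
// the "stopped" branch can report who stopped sharing.
function createPresenterTracker(log = console.log) {
  let currentPresenterId = null;
  return function onPresenterChanged(presenterId) {
    if (presenterId) {
      log(`${presenterId} started screen share`);
    } else if (currentPresenterId) {
      log(`${currentPresenterId} stopped screen share`);
    }
    currentPresenterId = presenterId || null;
    return currentPresenterId;
  };
}

// True only for screen share streams, matching the `kind` check used above.
function isShareStream(stream) {
  return Boolean(stream) && stream.kind === "share";
}
```

In a component, the tracker would need to be created once (for example, held in a ref) so that its remembered presenter survives re-renders.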

By following the steps outlined in this guide, you can seamlessly integrate the Screen Share feature and empower your users to share their screens with ease, fostering better communication and collaboration.

🔚 Conclusion

Screen Share enables participants to easily share their ideas, presentations, and information during corporate meetings, educational sessions, or creative collaborations. With VideoSDK's Screen Share functionality, you can create immersive and engaging video call experiences that connect people from all over the world.

To unlock the full potential of VideoSDK and create easy-to-use video experiences, sign up with VideoSDK today and get 10,000 free minutes to take your video app to the next level!